Details

Type: Bug

Status: Resolved

Priority: Major

Resolution: Fixed

Affects Version/s: 2.4.3

None

Environment:

hadoop 2.7.3

spark 2.4.3

jdk 1.8.0_60

Description

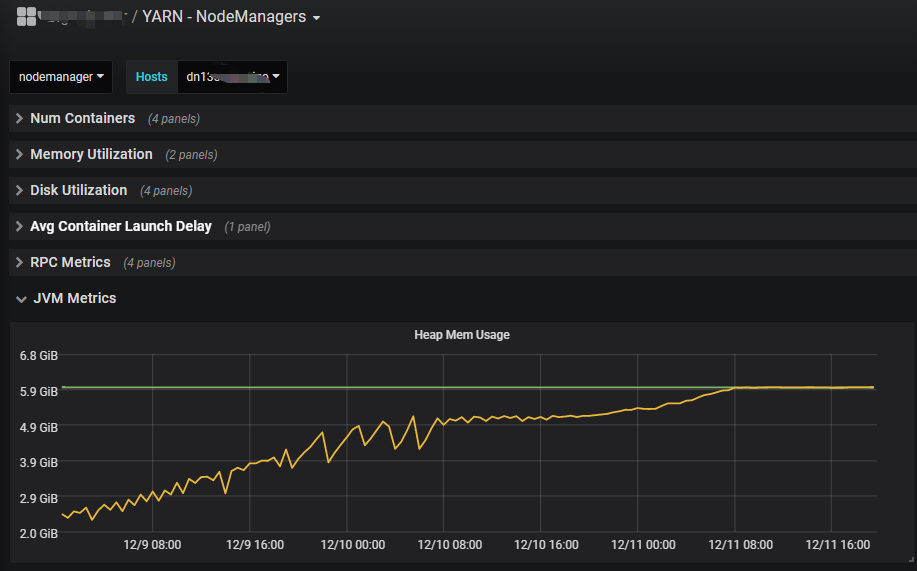

In our large, busy YARN cluster, which deploys the Spark external shuffle service as a YARN NodeManager (NM) auxiliary service, we encountered OOM errors in some NMs.

After dumping the heap, I found that some StreamState objects were still on the heap even though the applications they belonged to had already finished.
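To make the symptom concrete, here is a minimal Java sketch of the check the heap dump effectively performs: given stream entries tagged with the application that opened them, list those whose application is no longer running. `StaleStreamCheck`, `StreamEntry`, and `findStaleStreams` are hypothetical illustrations, not Spark's `StreamState` or `OneForOneStreamManager` API.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collection;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Hypothetical sketch: not Spark's StreamState / OneForOneStreamManager API.
class StaleStreamCheck {

    // Simplified stand-in for a registered stream: its id and owning application.
    static final class StreamEntry {
        final long streamId;
        final String appId;

        StreamEntry(long streamId, String appId) {
            this.streamId = streamId;
            this.appId = appId;
        }
    }

    // Returns the ids of streams whose owning application is not in runningApps,
    // i.e. entries that should already have been cleaned up.
    static List<Long> findStaleStreams(Collection<StreamEntry> entries, Set<String> runningApps) {
        List<Long> stale = new ArrayList<>();
        for (StreamEntry e : entries) {
            if (!runningApps.contains(e.appId)) {
                stale.add(e.streamId);
            }
        }
        return stale;
    }

    public static void main(String[] args) {
        List<StreamEntry> entries = Arrays.asList(
                new StreamEntry(1L, "app_1"),
                new StreamEntry(2L, "app_2"),
                new StreamEntry(3L, "app_1"));
        Set<String> running = new HashSet<>(Arrays.asList("app_2"));
        // app_1 has finished, so its streams 1 and 3 are reported as stale.
        System.out.println(findStaleStreams(entries, running)); // prints [1, 3]
    }
}
```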

Here are some related figures:

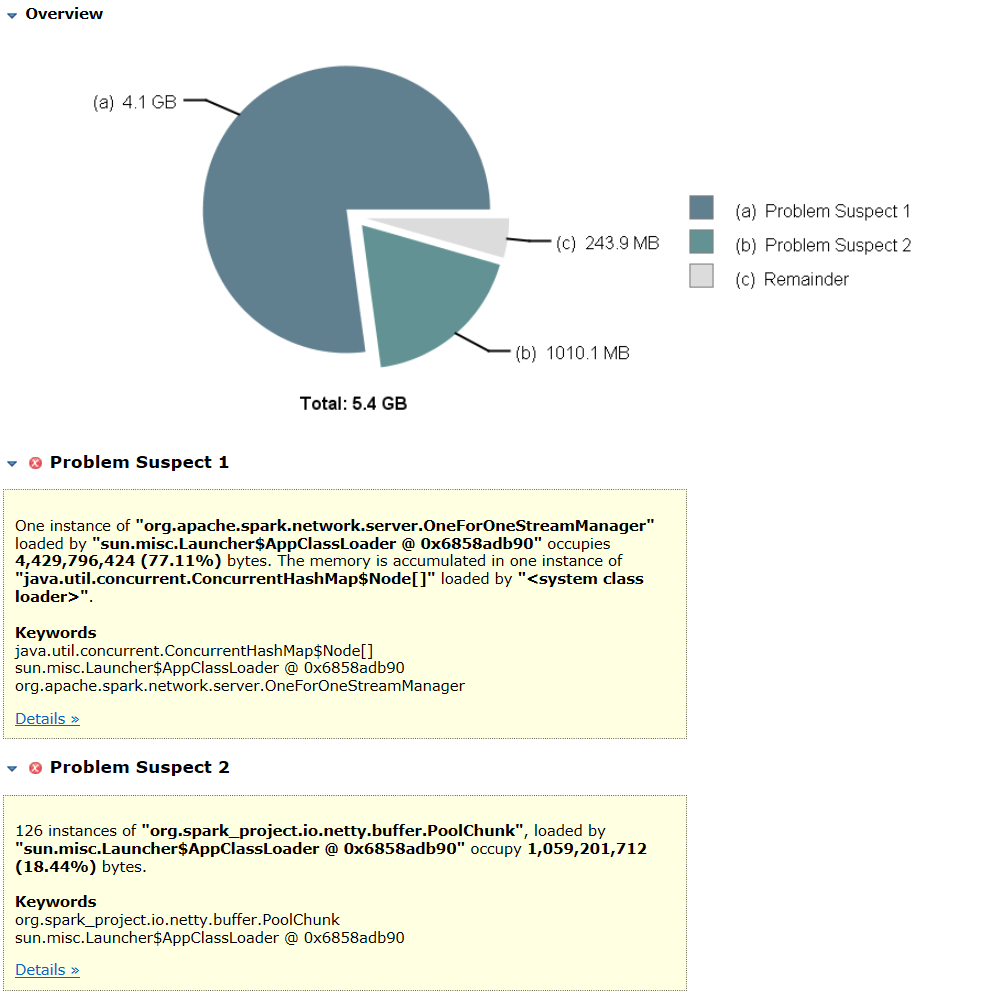

The heap dump below shows that the memory consumption mainly consists of two parts:

(1) OneForOneStreamManager: 4,429,796,424 bytes (77.11%)

(2) PoolChunk: 1,059,201,712 bytes (18.44%)
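As a quick consistency check on these figures, assuming both percentages are shares of the same total, each (bytes, share) pair implies a total of about 5.74 GB (about 5.35 GiB), and the two parts together account for about 95.55% of it. The small Java program below does that arithmetic; `HeapShareCheck` and `impliedTotal` are illustrative names only.

```java
// Derives the approximate total that each reported (bytes, percent) pair refers to.
class HeapShareCheck {

    // total = bytes / (percent / 100)
    static double impliedTotal(long bytes, double percent) {
        return bytes / (percent / 100.0);
    }

    public static void main(String[] args) {
        double fromStreamManager = impliedTotal(4_429_796_424L, 77.11);
        double fromPoolChunk = impliedTotal(1_059_201_712L, 18.44);
        // Both come out near 5.74e9 bytes; the small gap is due to rounded percentages.
        System.out.printf("%.2f GB%n", fromStreamManager / 1e9); // prints 5.74 GB
        System.out.printf("%.2f GB%n", fromPoolChunk / 1e9);     // prints 5.74 GB
        System.out.printf("%.2f%%%n", 77.11 + 18.44);            // prints 95.55%
    }
}
```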

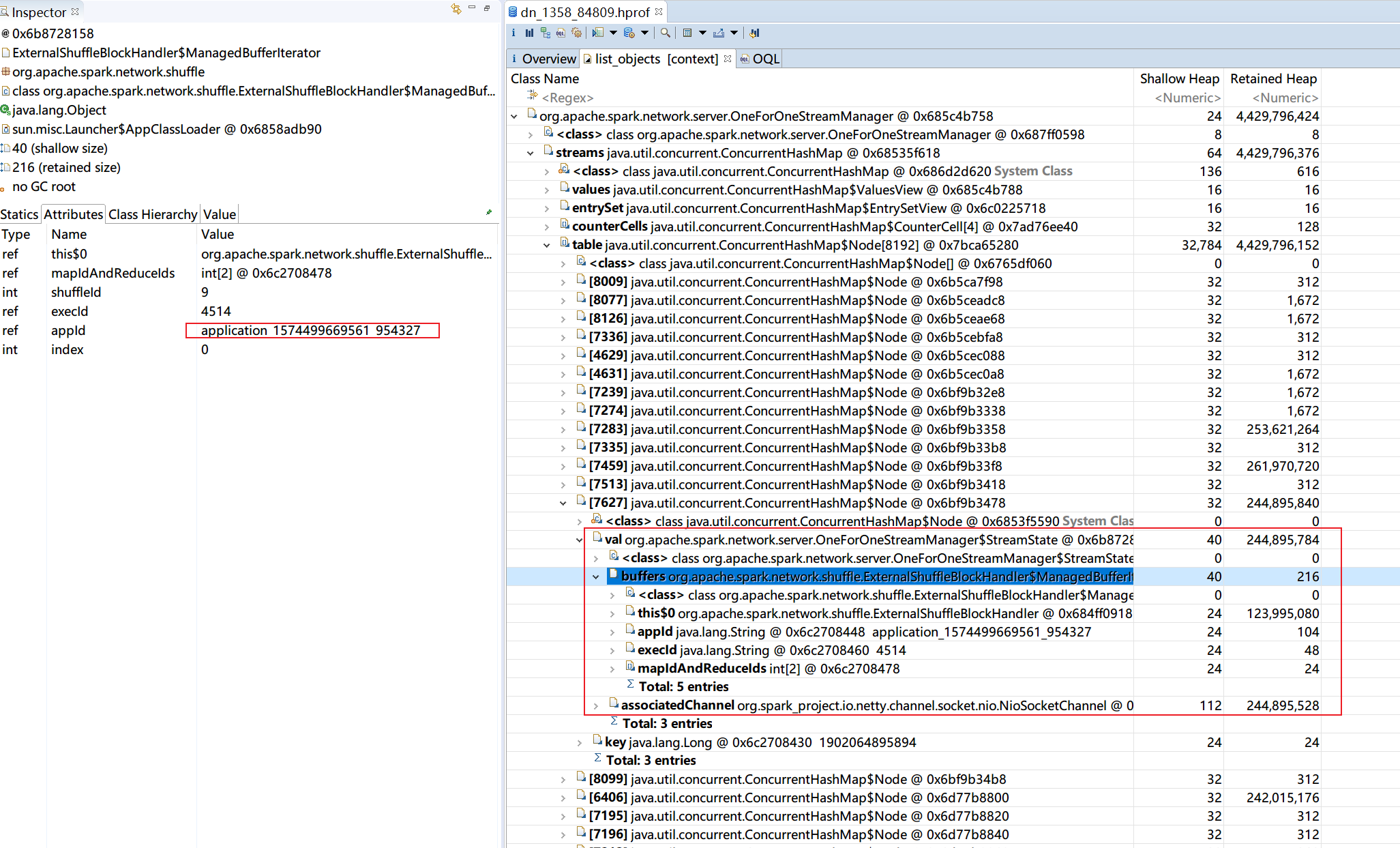

Digging into the OneForOneStreamManager shows that some StreamStates still remain:

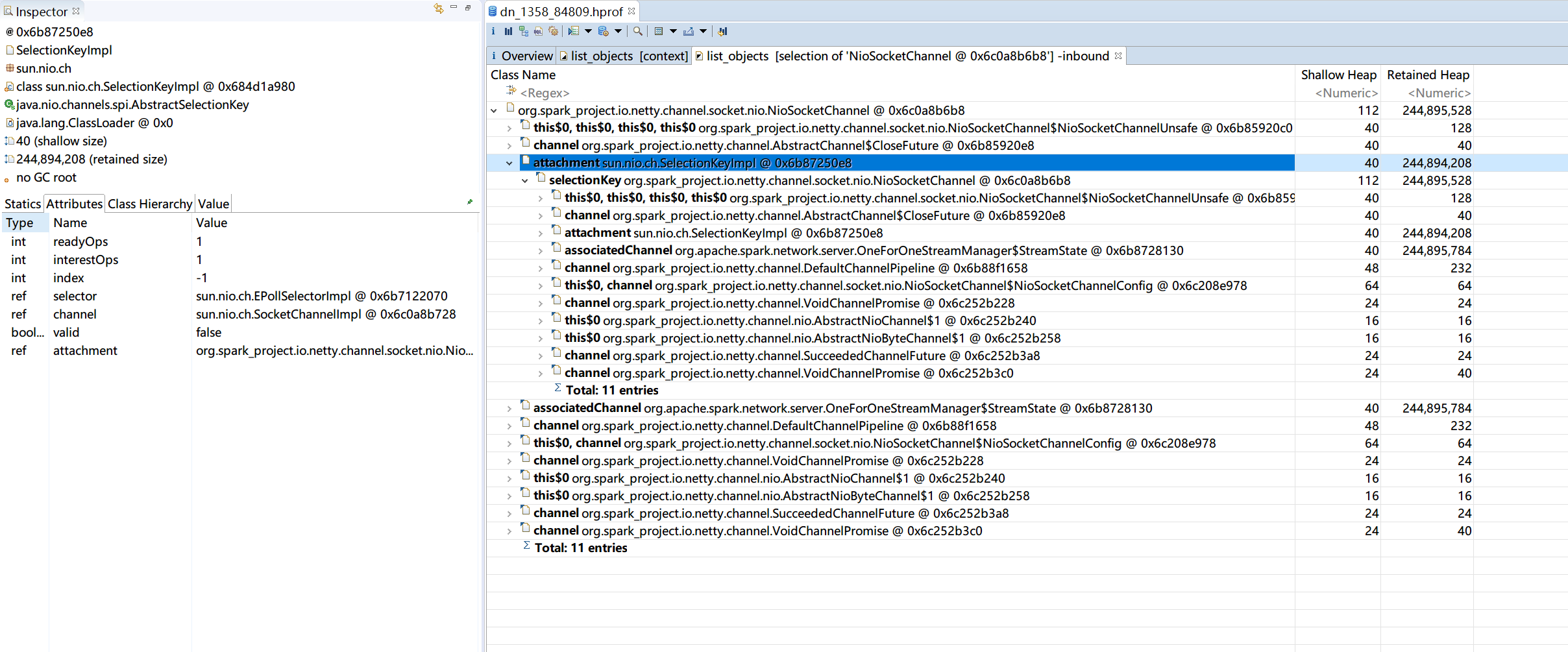
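The `associatedChannel` reference matters because a live map entry keeps its channel object, and whatever that object references, strongly reachable. The Java sketch below is a hypothetical, simplified registry rather than Spark's code: it shows two cleanup paths one might expect, dropping entries when their connection terminates and dropping entries when their application is removed. An entry that neither path ever reaches stays in the map indefinitely, which is consistent with the leftover StreamStates in the heap dump. This is an illustration of the failure mode, not necessarily the fix that resolved this issue.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical, simplified registry; not Spark's OneForOneStreamManager.
class StreamRegistry {

    // Per-stream state holding a strong reference to the channel that opened it.
    static final class State {
        final String appId;
        final Object channel; // stand-in for a Netty Channel

        State(String appId, Object channel) {
            this.appId = appId;
            this.channel = channel;
        }
    }

    private final AtomicLong nextId = new AtomicLong();
    private final Map<Long, State> streams = new ConcurrentHashMap<>();

    long register(String appId, Object channel) {
        long id = nextId.getAndIncrement();
        streams.put(id, new State(appId, channel));
        return id;
    }

    // Cleanup path 1: drop every stream opened over a connection that has closed.
    void connectionTerminated(Object channel) {
        streams.values().removeIf(s -> s.channel == channel);
    }

    // Cleanup path 2: drop every stream belonging to an application that has finished.
    void applicationRemoved(String appId) {
        streams.values().removeIf(s -> s.appId.equals(appId));
    }

    int size() {
        return streams.size();
    }

    public static void main(String[] args) {
        StreamRegistry registry = new StreamRegistry();
        Object ch1 = new Object();
        Object ch2 = new Object();
        registry.register("app_1", ch1);
        registry.register("app_1", ch2);
        registry.register("app_2", ch2);

        registry.connectionTerminated(ch1); // removes app_1's stream on ch1
        System.out.println(registry.size()); // prints 2

        // Without this call, app_1's stream on the still-open ch2 would stay in the map.
        registry.applicationRemoved("app_1");
        System.out.println(registry.size()); // prints 1
    }
}
```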

Incoming references to StreamState::associatedChannel: